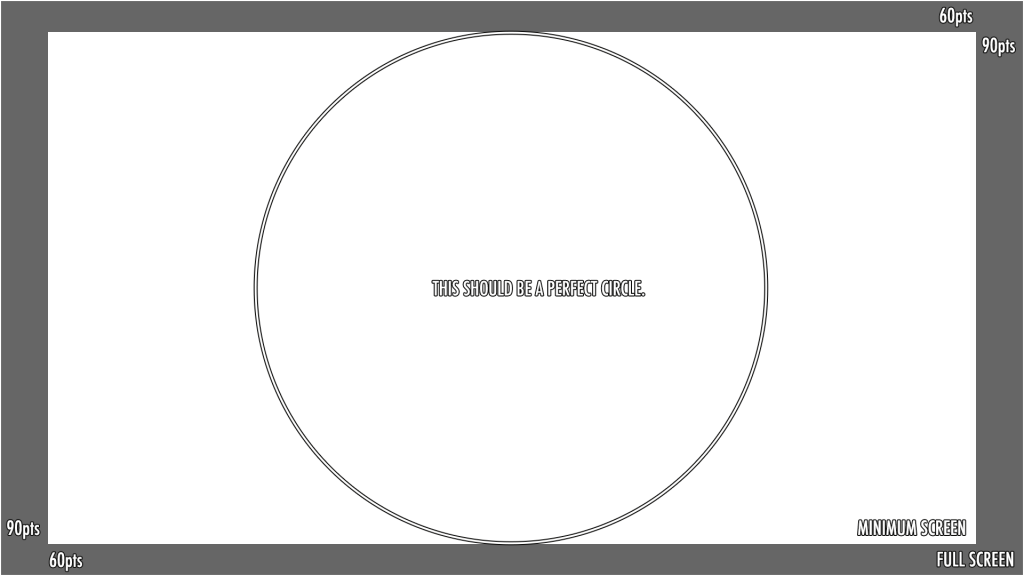

I am developing an app for tvOS. One thing you quickly run into when testing on real-world devices is that some TV models cut off part of the screen area. To deal with this, Apple added a calibration tool to the Settings app.

I found I needed this information to make my UI layout work for customers. So I took a screenshot of the calibration screen and saved it as a PNG that I can overlay at any time during development, as a Remote Layout Helper for my own orientation.

Download the Overlay Image (use Save as…)

On my main controller I added the following method, which I call in - (void) viewDidAppear:(BOOL)animated:

    - (void) activateCalibrationOverlay {
        if( DEBUG_CALIBRATION_OVERLAY ) {
            // Create the overlay only once, even if viewDidAppear: runs again.
            if( !overlayImageView ) {
                self.overlayImageView = [[UIImageView alloc] initWithImage:[UIImage imageNamed:@"tvOS_screen_size.png"]];
                overlayImageView.alpha = 0.0; // start hidden
                overlayImageView.userInteractionEnabled = NO;
                // Add to the window so the overlay sits on top of everything else.
                [[self appDelegate].window addSubview:overlayImageView];
                [[self appDelegate].window bringSubviewToFront:overlayImageView];
                if( !overlayPlayPauseGesture ) {
                    // React only to the PLAY/PAUSE button of the remote.
                    self.overlayPlayPauseGesture = [[UITapGestureRecognizer alloc] initWithTarget:self action:@selector(handleRemoteTapPlayPause:)];
                    overlayPlayPauseGesture.allowedPressTypes = @[[NSNumber numberWithInteger:UIPressTypePlayPause]];
                    [self.view addGestureRecognizer:overlayPlayPauseGesture];
                }
            }
        }
    }

This adds the Remote Layout Helper screen image on top of everything else, plus a gesture recognizer that monitors the PLAY/PAUSE button of the TV remote. The recognizer calls the following method:

    -(void)handleRemoteTapPlayPause:(UIGestureRecognizer*)tapRecognizer {
        if( overlayImageView.alpha == 0.0f ) {
            [UIView animateWithDuration:0.3 animations:^{
                overlayImageView.alpha = 0.3;
            }];
            return;
        }
        if( overlayImageView.alpha == 0.3f ) {
            [UIView animateWithDuration:0.3 animations:^{
                overlayImageView.alpha = 0.5;
            }];
            return;
        }
        if( overlayImageView.alpha == 0.5f ) {
            [UIView animateWithDuration:0.3 animations:^{
                overlayImageView.alpha = 0.8;
            }];
            return;
        }
        if( overlayImageView.alpha == 0.8f ) {
            [UIView animateWithDuration:0.3 animations:^{
                overlayImageView.alpha = 1.0;
            }];
            return;
        }
        if( overlayImageView.alpha == 1.0f ) {
            [UIView animateWithDuration:0.3 animations:^{
                overlayImageView.alpha = 0.0;
            }];
            return;
        }
    }
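The handler above depends on exact floating-point comparisons such as alpha == 0.3f, which is easy to break if the values or types ever change. As a possible alternative, here is a minimal sketch of the same stepping logic as a plain C function (it compiles unchanged inside an Objective-C .m file). The name nextOverlayAlpha and the tolerance of 0.01 are my own assumptions for illustration, not part of the original code.

```c
#include <stddef.h>

/* Hypothetical helper: returns the opacity that follows `current`
   in the cycle 0.0 -> 0.3 -> 0.5 -> 0.8 -> 1.0 -> 0.0.
   Values are matched with a small tolerance instead of ==. */
static float nextOverlayAlpha(float current) {
    static const float levels[] = {0.0f, 0.3f, 0.5f, 0.8f, 1.0f};
    const size_t count = sizeof levels / sizeof levels[0];
    const float tolerance = 0.01f; /* assumed; any value well below the step size works */

    for (size_t i = 0; i < count; i++) {
        float diff = current - levels[i];
        if (diff < 0.0f) {
            diff = -diff;
        }
        if (diff < tolerance) {
            /* Found the current step: wrap around after the last one. */
            return levels[(i + 1) % count];
        }
    }
    /* Unknown opacity: hide the overlay so the cycle restarts cleanly. */
    return 0.0f;
}
```

With such a helper, the body of handleRemoteTapPlayPause: could shrink to a single animation block that sets overlayImageView.alpha = nextOverlayAlpha((float)overlayImageView.alpha). I have not measured any difference in behavior; the point is only that adding or changing a step then touches one array instead of five if-blocks.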

This helped me a lot in getting the layout right during development. I hope it helps you too. Feel free to share!

How to use it

You simply press PLAY/PAUSE repeatedly, and each press fades the overlay to the next alpha level: 0.3, 0.5, 0.8, 1.0 and then back to 0.0 (hidden). This makes it easy to check the boundaries of your UI elements against the screen limits.

See the following screen example:

Note that you need a retained variable for the UIImageView called overlayImageView and another for the tap gesture recognizer called overlayPlayPauseGesture. Also note that I use a boolean preprocessor flag named DEBUG_CALIBRATION_OVERLAY to switch this feature OFF in deployment.
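For orientation, the declarations the two methods rely on could look roughly like the sketch below. The class name MainViewController and the 0/1 macro style are assumptions of mine; the original project may declare them differently. The appDelegate helper called in activateCalibrationOverlay is likewise assumed to exist elsewhere in the controller and is not shown here.

```objc
#import <UIKit/UIKit.h>

// Assumption: the flag is a simple 0/1 macro. Set it to 0 for release builds.
#ifndef DEBUG_CALIBRATION_OVERLAY
#define DEBUG_CALIBRATION_OVERLAY 1
#endif

// MainViewController is a hypothetical name for the main controller.
@interface MainViewController : UIViewController

// Strong (retained) references, so overlay and recognizer stay alive.
@property (nonatomic, strong) UIImageView *overlayImageView;
@property (nonatomic, strong) UITapGestureRecognizer *overlayPlayPauseGesture;

@end

@implementation MainViewController

// Explicit @synthesize names the instance variables without a leading
// underscore, which is how the methods in this post access them directly.
@synthesize overlayImageView;
@synthesize overlayPlayPauseGesture;

@end
```

Without the explicit @synthesize lines, the compiler would name the instance variables _overlayImageView and _overlayPlayPauseGesture, and the direct accesses in the two methods would have to be changed to match or go through self.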

Why do I blog this? I found it cumbersome to check on real-world TV screens whether my app works. So I just made sure that most of my UI is usable from within the MINIMUM SCREENSIZE frame that the overlay graphic shows me. Being able to check this at any time using the remote is a huge plus, also on the real device. (Do not forget to disable the code on deployment!)